Summary

I spent about two and a half years as a research analyst at GiveWell. For most of my time there, I was the point person on GiveWell’s main cost-effectiveness analyses. I’ve come to believe there are serious, underappreciated issues with the methods the effective altruism (EA) community at large uses to prioritize causes and programs. While effective altruists approach prioritization in a number of different ways, most approaches involve (a) roughly estimating the possible impacts funding opportunities could have and (b) assessing the probability that possible impacts will be realized if an opportunity is funded.

I discuss the phenomenon of the optimizer’s curse: when assessments of activities’ impacts are uncertain, engaging in the activities that look most promising will tend to have a smaller impact than anticipated. I argue that the optimizer’s curse should be extremely concerning when prioritizing among funding opportunities that involve substantial, poorly understood uncertainty. I further argue that proposed Bayesian approaches to avoiding the optimizer’s curse are often unrealistic. I maintain that it is a mistake to try to understand all uncertainty in terms of precise probability estimates.

This post is long, so I’ve separated it into several sections:

- The optimizer’s curse

- Models, wrong-way reductions, and probability

- Hazy probabilities and prioritization

- Bayesian wrong-way reductions

- Doing better

Part 1: The optimizer’s curse

The counterintuitive phenomenon of the optimizer’s curse was first formally recognized in Smith & Winkler 2006.

Here’s a rough sketch:

- Optimizers start by calculating the expected value of different activities.

- Estimates of expected value involve uncertainty.

- Sometimes expected value is overestimated, sometimes expected value is underestimated.

- Optimizers aim to engage in activities with the highest expected values.

- Result: Optimizers tend to select activities with overestimated expected value.

Smith and Winkler refer to the difference between the expected value of an activity and its realized value as “postdecision surprise.”

The optimizer’s curse occurs even in scenarios where estimates of expected value are unbiased (roughly, where any given estimate is as likely to be too optimistic as it is to be too pessimistic[1]). When estimates are biased—which they typically are in the real world—the magnitude of the postdecision surprise may increase.
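The sketch above can be checked with a small simulation. This is only an illustration under assumptions that are mine, not Smith and Winkler’s: every option has the same true value (0.0), each estimate adds zero-mean Gaussian noise, and the function name `simulate_surprise` and its parameters are hypothetical.

```python
import random
import statistics


def simulate_surprise(n_options=10, noise_sd=1.0, trials=20_000, seed=0):
    """Average (estimate - true value) for the option that looks best.

    Assumption: every option's true value is 0.0 and each estimate is the
    true value plus zero-mean Gaussian noise, so each estimate is unbiased.
    """
    rng = random.Random(seed)
    surprises = []
    for _ in range(trials):
        true_values = [0.0] * n_options
        estimates = [v + rng.gauss(0.0, noise_sd) for v in true_values]
        # The optimizer picks whichever option has the highest estimate.
        best = max(range(n_options), key=estimates.__getitem__)
        surprises.append(estimates[best] - true_values[best])
    return statistics.mean(surprises)


print(f"1 option:   {simulate_surprise(n_options=1):+.2f}")
print(f"10 options: {simulate_surprise(n_options=10):+.2f}")
```

With a single option the average overstatement should sit near zero. With ten options to choose from, the chosen option’s estimate should overstate its true value on average, even though no individual estimate is biased.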

A huge problem for effective altruists facing uncertainty

In a simple model, I show how an optimizer with only moderate uncertainty about factors that determine opportunities’ cost-effectiveness may dramatically overestimate the cost-effectiveness of the opportunity that appears most promising. As uncertainty increases, the degree to which the cost-effectiveness of the optimal-looking program is overstated grows wildly.
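To give a feel for how quickly the overstatement can grow, here is a rough stand-in. It is not the model referred to above, and every number in it is an assumption: ten programs with identical true cost-effectiveness (1.0), each estimated as the product of three factors, where each factor is lognormal with median 1 (so it is as likely to be too high as too low). The name `median_overstatement` is hypothetical.

```python
import math
import random
import statistics


def median_overstatement(log_sd, n_programs=10, n_factors=3, trials=5_000, seed=0):
    """Median ratio of estimated to true cost-effectiveness for the top-looking program."""
    rng = random.Random(seed)
    ratios = []
    for _ in range(trials):
        # Assumption: true cost-effectiveness is 1.0 for every program, so the
        # highest estimate is itself the overstatement ratio.
        estimates = [
            math.prod(rng.lognormvariate(0.0, log_sd) for _ in range(n_factors))
            for _ in range(n_programs)
        ]
        ratios.append(max(estimates))
    return statistics.median(ratios)


for log_sd in (0.1, 0.25, 0.5, 1.0):
    ratio = median_overstatement(log_sd)
    print(f"factor log-sd {log_sd:<4}: top program overstated about {ratio:.1f}x")
```

Because the factors multiply, the overstatement in this toy setup grows roughly exponentially with the uncertainty in each factor rather than linearly.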

I believe effective altruists should find this extremely concerning. They’ve considered a large number of causes. They often have massive uncertainty about the true importance of causes they’ve prioritized. For example, GiveWell acknowledges substantial uncertainty about the impact of deworming programs it recommends, and the Open Philanthropy Project pursues a high-risk, high-reward grantmaking strategy.

The optimizer’s curse can show up even in situations where effective altruists’ prioritization decisions don’t involve formal models or explicit estimates of expected value. Someone informally assessing philanthropic opportunities in a linear manner might have a thought like:

Let me compare that to how I feel about some other funding opportunities…

Although the thinking is informal, there’s uncertainty, potential for bias, and an optimization-like process.[2]

Previously proposed solution

The optimizer’s curse hasn’t gone unnoticed by impact-oriented philanthropists. Luke Muehlhauser, a senior research analyst at the Open Philanthropy Project and the former executive director of the Machine Intelligence Research Institute, wrote an article titled The Optimizer’s Curse and How to Beat It. Holden Karnofsky, the co-founder of GiveWell and the CEO of the Open Philanthropy Project, wrote Why we can’t take expected value estimates literally. While Karnofsky didn’t directly mention the phenomenon of the optimizer’s curse, he covered closely related concepts.

Both Muehlhauser and Karnofsky suggested that the solution to the problem is to make Bayesian adjustments. Muehlhauser described this solution as “straightforward.”[3] Karnofsky seemed to think Bayesian adjustments should be made, but he acknowledged serious difficulties involved in making explicit, formal adjustments.[4] Bayesian adjustments are also proposed in Smith & Winkler 2006.[5]

Here’s what Smith & Winkler propose (I recommend skipping it if you’re not a statistics buff):[6]

For entities with lots of past data on both the (a) expected values of activities and (b) precisely measured, realized values of the same activities, this may be an excellent solution.
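A minimal sketch of why the adjustment works when the prior really is known. It uses a textbook normal-normal model, which is an assumption on my part and not necessarily Smith and Winkler’s formulation: true values are drawn from a known normal prior, estimates add normal noise with known variance, and each estimate is shrunk toward the prior mean. The function name `compare_surprise` is hypothetical.

```python
import random
import statistics


def compare_surprise(prior_mean=0.0, prior_sd=1.0, noise_sd=1.0,
                     n_options=10, trials=20_000, seed=0):
    """Return (naive, adjusted) average overstatement for the chosen option."""
    rng = random.Random(seed)
    # Weight placed on the raw estimate in the normal-normal posterior mean.
    shrink = prior_sd**2 / (prior_sd**2 + noise_sd**2)
    naive, adjusted = [], []
    for _ in range(trials):
        truths = [rng.gauss(prior_mean, prior_sd) for _ in range(n_options)]
        estimates = [t + rng.gauss(0.0, noise_sd) for t in truths]
        best = max(range(n_options), key=estimates.__getitem__)
        posterior_mean = prior_mean + shrink * (estimates[best] - prior_mean)
        naive.append(estimates[best] - truths[best])
        adjusted.append(posterior_mean - truths[best])
    return statistics.mean(naive), statistics.mean(adjusted)


naive, adjusted = compare_surprise()
print(f"naive overstatement:    {naive:+.2f}")
print(f"adjusted overstatement: {adjusted:+.2f}")
```

The adjusted figure should land near zero, but only because the simulation hands the optimizer the exact prior and noise level that generated the data. That is the kind of information the past-data requirement is meant to supply.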

In most scenarios where effective altruists encounter the optimizer’s curse, this solution is unworkable. The necessary data doesn’t exist.[7] The impact of most philanthropic programs has not been rigorously measured. Most funding decisions are not made on the basis of explicit expected value estimates. Many causes effective altruists are interested in are novel: there have never been opportunities to collect the necessary data.

The alternatives I’ve heard effective altruists propose involve attempts to approximate data-driven Bayesian adjustments as well as possible given the lack of data. I believe these alternatives either don’t generally work in practice or aren’t worth calling Bayesian.

To make my case, I’m going to first segue into some other topics.

Part 2: Models, wrong-way reductions, and probability

Models

In my experience, members of the effective altruism community are far more likely than the typical person to try to understand the world (and make decisions) on the basis of abstract models.[8] I don’t think enough effort goes into considering when (if ever) these abstract models cease to be appropriate for application.

This post’s opening quote comes from a great blog post by John D Cook. In the post, Cook explains how Euclidean geometry is a great model for estimating the area of a football field—multiply field_length * field_width and you’ll get a result that’s pretty much exactly the field’s area. However, Euclidean geometry ceases to be a reliable model when calculating the area of truly massive spaces—the curvature of the earth gets in the way.[9] Most models work the same way. Here’s how Cook ends his blog post:[10]

Most models do not scale up or down over anywhere near as many orders of magnitude as Euclidean geometry or Newtonian physics. If a dose-response curve, for example, is linear for observations in the range of 10 to 100 milligrams, nobody in his right mind would expect the curve to remain linear for doses up to a kilogram. It wouldn’t be surprising to find out that linearity breaks down before you get to 200 milligrams.
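The football field point can be made concrete with a short calculation. This is a sketch under simplifying assumptions of mine: the earth is treated as a perfect sphere with a mean radius of 6,371 km, and I compare circular regions rather than rectangles because the spherical formula is simpler. The function names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # assumption: mean radius, earth treated as a perfect sphere


def flat_disk_area(r):
    """Euclidean area of a circle with radius r."""
    return math.pi * r**2


def spherical_cap_area(r, sphere_radius=EARTH_RADIUS_M):
    """Area of the region within surface distance r of a point on a sphere."""
    # 4*pi*R^2*sin^2(r / 2R) equals 2*pi*R^2*(1 - cos(r / R)) but is
    # numerically stable when r is tiny compared with R.
    return 4 * math.pi * sphere_radius**2 * math.sin(r / (2 * sphere_radius)) ** 2


def relative_error(r):
    """How much the flat formula overstates the area on the sphere."""
    return flat_disk_area(r) / spherical_cap_area(r) - 1


for r in (50.0, 50_000.0, 5_000_000.0):
    print(f"radius {r:>11,.0f} m: flat model off by {relative_error(r):.2e}")
```

At the scale of a football field the two formulas agree to many decimal places. At a radius of 5,000 km the flat formula is off by several percent.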

Wrong-way reductions

In a brilliant article, David Chapman coins the term “wrong-way reduction” to describe an error people commit when they propose tackling a complicated, hard problem with an apparently simple solution that, on further inspection, turns out to be more problematic than the initial problem. Chapman points out that regular people rarely make this kind of error. Usually, wrong-way reductions are motivated errors committed by people in fields like philosophy, theology, and cognitive science.

The problematic solutions wrong-way reductions offer often take this form:

People advocating wrong-way reductions often gloss over the fact that their proposed solutions require something we don’t have or engage in intellectual gymnastics to come up with something that can act as a proxy for the thing we don’t have. In most cases, these intellectual gymnastics strike outsiders as ridiculous but come off more convincing to people who’ve accepted the ideology that motivated the wrong-way reduction.

A wrong-way reduction is often an attempt to universalize an approach that works in a limited set of situations. Put another way, wrong-way reductions involve stretching a model way beyond the domains it’s known to work in.

An example

I spent a lot of my childhood in evangelical, Christian communities. Many of my teachers and church leaders subscribed to the idea that the Bible was the literal, infallible word of God. If you presented some of these people with questions about how to live or how to handle problems, they’d encourage you to turn to the Bible.[11]

In some cases, the Bible offered fairly clear guidance. When faced with the question of whether one should worship the Judeo-Christian God, the commandment, “You shall have no other gods before me”[12] gives a clear answer. Other parts of the Bible are consistent with that commandment. However, “follow the Bible” ends up as a wrong-way reduction because the Bible doesn’t give clear answers to most of the questions that fall under the umbrella of “How should one live?”

Is abortion OK? One of the Ten Commandments states, “You shall not murder.”[13] But then there are other passages that advocate execution.[14] How similar are abortion, execution, and murder anyway?

Should one continue dating a significant other? Start a business? It’s not clear where to start with those questions.

I intentionally used an example that I don’t think will ruffle too many readers’ feathers, but imagine for a minute what it’s like to be a person who subscribes to the idea that the Bible is a complete and infallible guide:

You’re likely in a close-knit community with like-minded people. Intelligent and respected members of the community regularly turn to the Bible for advice and encourage you to do the same.

When you have doubts about the coherence of your worldview, there’s someone smarter than you in the church community you can consult. The wise church member has almost certainly heard concerns similar to yours before and can explain why the apparent issues or inconsistencies you’ve run into may not be what they seem.

A mainstream Christian from outside the community probably wouldn’t find the rationales offered by the church member compelling. An individual who’s already in the community is more easily convinced.[15]

Probability

The idea that all uncertainty must be explainable in terms of probability is a wrong-way reduction. Getting more detailed, the idea that if one knows the probabilities and utilities of all outcomes, then she can always behave rationally in pursuit of her goals is a wrong-way reduction.

This isn’t a novel claim. People have been saying versions of it for a long time. The term Knightian uncertainty is often used to distinguish quantifiable risk from unquantifiable uncertainty.

As I’ll illustrate later, we don’t need to assume a strict dichotomy separates quantifiable risks from unquantifiable risks. Instead, real-world uncertainty falls on something like a spectrum.

Nate Soares, the executive director of the Machine Intelligence Research Institute, wrote a post on LessWrong that demonstrates the wrong-way reduction I’m concerned about. He writes:[16]

I don’t think ignorance must cash out as a probability distribution. I don’t have to use probabilistic decision theory to decide how to act.

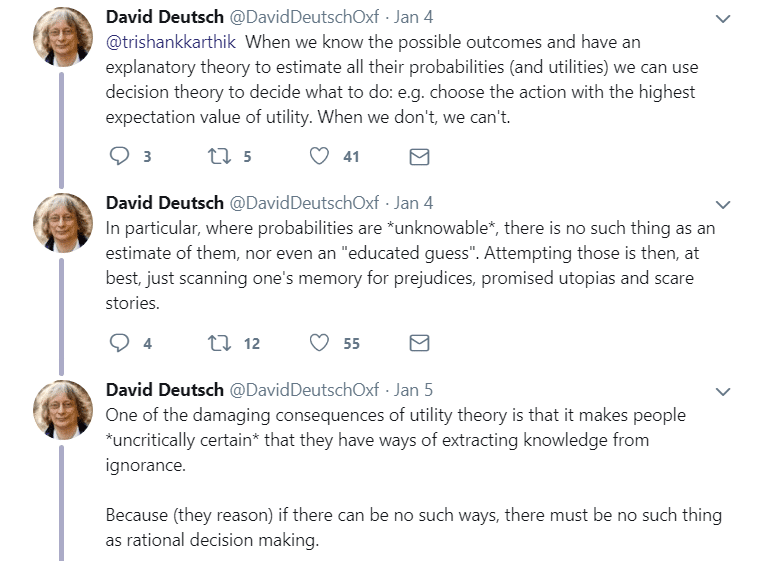

Here’s the physicist David Deutsch tweeting on a related topic:

What is probability?

Probability is, as far as we know, an abstract mathematical concept. It doesn’t exist in the physical world of our everyday experience.[17] However, probability has useful, real-world applications. It can aid in describing and dealing with many types of uncertainty.

I’m not a statistician or a philosopher. I don’t expect anyone to accept that position based on my authority. That said, I believe I’m in good company. Here’s an excerpt from Bayesian statistician Andrew Gelman on the same topic:[18]

Consider a handful of statements that involve probabilities:

- A hypothetical fair coin tossed in a fair manner has a 50% chance of coming up heads.

- When two buddies at a bar flip a coin to decide who buys the next round, each person has a 50% chance of winning.

- Experts believe there’s a 20% chance the cost of a gallon of gasoline will be higher than $3.00 by this time next year.

- Dr. Paulson thinks there’s an 80% chance that Moore’s Law will continue to hold over the next 5 years.

- Dr. Johnson thinks there’s a 20% chance quantum computers will commonly be used to solve everyday problems by 2100.

- Kyle is an atheist. When asked what odds he places on the possibility that an all-powerful god exists, he says “2%.”

I’d argue that the degree to which probability is a useful tool for understanding uncertainty declines as you descend the list.

- The first statement is tautological. When I describe something as “fair,” I mean that it perfectly conforms to abstract probability theory.

- In the early statements, the probability estimates can be informed by past experiences with similar situations and explanatory theories.

- In the final statement, I don’t know what to make of the probability estimate.

The hypothetical atheist from the final statement, Kyle, wouldn’t be able to draw on past experiences with different realities (i.e., Kyle didn’t previously experience a bunch of realities and learn that some of them had all-powerful gods while others didn’t). If you push someone like Kyle to explain why they chose 2% rather than 4% or 0.5%, you almost certainly won’t get a clear explanation.

If you gave the same “What probability do you place on the existence of an all-powerful god?” question to a number of self-proclaimed atheists, you’d probably get a wide range of answers.[19]

I bet you’d find that some people would give answers like 10%, others 1%, and others 0.001%. While these probabilities can all be described as “low,” they differ by orders of magnitude. If probabilities like these are used alongside probabilistic decision models, they could have extremely different implications. Going forward, I’m going to call probability estimates like these “hazy probabilities.”
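A quick arithmetic sketch of why the spread matters. The payoff and the sure alternative below are made-up numbers chosen purely for illustration, and `preferred_option` is a hypothetical helper.

```python
PAYOFF_IF_TRUE = 1_000_000  # hypothetical units of good if the hazy scenario is real
SURE_ALTERNATIVE = 50_000   # hypothetical units of good from a well-understood option


def expected_value(probability, payoff=PAYOFF_IF_TRUE):
    return probability * payoff


def preferred_option(probability):
    """Pick whichever option has the higher expected value."""
    if expected_value(probability) > SURE_ALTERNATIVE:
        return "speculative bet"
    return "sure alternative"


# The three "low" probabilities from the paragraph above: 10%, 1%, 0.001%.
for p in (0.10, 0.01, 0.00001):
    print(f"p = {p:<8}: EV = {expected_value(p):>9,.0f} -> {preferred_option(p)}")
```

All three inputs could be described as “low,” yet the expected values span four orders of magnitude and the recommended choice flips between them.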

Placing hazy probabilities on the same footing as better-grounded probabilities (e.g., the odds a coin comes up heads) can lead to problems.

Part 3: Hazy probabilities and prioritization

Probabilities that feel somewhat hazy show up frequently in prioritization work that effective altruists engage in. Because I’m especially familiar with GiveWell’s work, I’ll draw on it for an illustrative example.[20] GiveWell’s rationale for recommending charities that treat parasitic worm infections hinges on follow-ups to a single study. Findings from these follow-ups are suggestive of large, long-term income gains for individuals who received deworming treatments as children.[21]

There were a lot of odd things about the study that make extrapolating to form expectations about the effect of deworming in today’s programs difficult.[22] To arrive at a bottom-line estimate of deworming’s cost-effectiveness, GiveWell assigns explicit, numerical values in multiple hazy-feeling situations. GiveWell faces similar haziness when modeling the impact of some other interventions it considers.[23]

While GiveWell’s funding decisions aren’t made exclusively on the basis of its cost-effectiveness models, the models play a significant role. Haziness also affects other, less-quantitative assessments GiveWell makes when deciding what programs to fund. That said, the level of haziness GiveWell deals with is minor in comparison to what other parts of the effective altruism community encounter.

Hazy, extreme events

There are a lot of earth-shattering events that could happen and revolutionary technologies that may be developed in my lifetime. In most cases, I would struggle to place precise numbers on the probability of these occurrences.

Some examples:

- A pandemic that wipes out the entire human race

- An all-out nuclear war with no survivors

- Advanced molecular nanotechnology

- Superhuman artificial intelligence

- Catastrophic climate change that leaves no survivors

- Whole-brain emulations

- Complete ability to stop and reverse biological aging

- Eternal bliss that’s granted only to believers in Thing X

You could come up with tons more.

I have rough feelings about the plausibility of each scenario, but I would struggle to translate any of these feelings into precise probability estimates. Putting probabilities on these outcomes seems a bit like the earlier example of an atheist trying to precisely state the probability he or she places on a god’s existence.

If I force myself to put numbers on things, I have thoughts like this:

An effective altruist might make a bunch of rough judgments about the likelihood of scenarios like those above, combine those probabilities with extremely hazy estimates about the impact she could have in each scenario and then decide which issue or issues should be prioritized. Indeed, I think this is more or less what the effective altruism community has done over the last decade.

When many hazy assessments are made, I think it’s quite likely that some activities that appear promising will only appear that way due to ignorance, inability to quantify uncertainty, or error.

Part 4: Bayesian wrong-way reductions

I believe the proposals effective altruists have made for salvaging general, Bayesian solutions to the optimizer’s curse are wrong-way reductions.

To make a Bayesian adjustment, it’s necessary to have a prior (roughly, a probability distribution that captures initial expectations about a scenario). As I mentioned earlier, effective altruists will rarely have the information necessary to create well-grounded, data-driven priors. To get around the lack of data, people propose coming up with priors in other ways.

For example, when there is serious uncertainty about the probabilities of different outcomes, people sometimes propose assuming that each possible outcome is equally probable. In some scenarios, this is a great heuristic.[24] In other situations, it’s a terrible approach.[25] To put it simply, a state of ignorance is not a probability distribution.

Karnofsky suggests a different approach (emphasis mine):[26]

I agree with Karnofsky that we should take our intuitions seriously, but I don’t think intuitions need to correspond to well-defined mathematical structures. Karnofsky maintains that Bayesian adjustments to expected value estimates “can rarely be made (reasonably) using an explicit, formal calculation.” I find this odd, and I think it may indicate that Karnofsky doesn’t really believe his intuitions cash out as priors. To make an explicit, Bayesian calculation, a prior doesn’t need to be well-justified. If one is capable of drawing or describing a prior distribution, a formal calculation can be made.

I agree with many aspects of Karnofsky’s conclusions, but I don’t think what Karnofsky is advocating should be called Bayesian. It’s closer to standard reasonableness and critical thinking in the face of poorly understood uncertainty. Calling Karnofsky’s suggested process “making a Bayesian adjustment” suggests that we have something like a general, mathematical method for critical thinking. We don’t.

Similarly, taking our hunches about the plausibility of scenarios we have a very limited understanding of and treating those hunches like well-grounded probabilities can lead us to believe we have a well-understood method for making good decisions related to those scenarios. We don’t.

Many people have unwarranted confidence in approaches that appear math-heavy or scientific. In my experience, effective altruists are not immune to that bias.

Part 5: Doing better

When discussing these ideas with members of the effective altruism community, I felt that people wanted me to propose a formulaic solution—some way to explicitly adjust expected value estimates that would restore the integrity of the usual prioritization methods. I don’t have any suggestions of that sort.

Below I outline a few ideas for how effective altruists might be able to pursue their goals despite the optimizer’s curse and difficulties involved in probabilistic assessments.

Embrace model skepticism

When models are being pushed outside of the domains where they have been built and tested, caution should be exercised. Particular skepticism is warranted in situations where a model is presented as a universal method for handling problems.

Entertain multiple models

If an opportunity looks promising under a number of different models, it’s more likely to be a good opportunity than one that looks promising under a single model.[27] It’s worth trying to foster several different mental models for making sense of the world. For the same reason, surveying experts about the value of funding opportunities may be extremely useful. Some experts will operate with different models and thinking styles than I do. Where my models have blind spots, their models may not.
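One way to see the intuition, as a toy sketch only. It assumes each model’s error is independent of the others, which real models sharing blind spots will not satisfy, and that every option has the same true value (0.0). The name `surprise_with_models` is hypothetical.

```python
import random
import statistics


def surprise_with_models(n_models, n_options=10, noise_sd=1.0, trials=10_000, seed=0):
    """Average overstatement of the option chosen on its mean estimate across models."""
    rng = random.Random(seed)
    surprises = []
    for _ in range(trials):
        # Assumption: true value is 0.0 for every option, so each model's
        # estimate is pure noise and the averaged estimate is the overstatement.
        averaged = [
            sum([rng.gauss(0.0, noise_sd) for _ in range(n_models)]) / n_models
            for _ in range(n_options)
        ]
        surprises.append(max(averaged))
    return statistics.mean(surprises)


for k in (1, 2, 4, 8):
    print(f"{k} model(s): chosen option overstated by {surprise_with_models(k):.2f}")
```

Under these assumptions the overstatement shrinks as more models are consulted. If the models’ errors are correlated, the benefit will be smaller.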

Test models

One of the ways we figure out how far models can reach is through application in varied settings. I don’t believe I have a 50-50 chance of winning a coin flip with a buddy for exclusively theoretical reasons. I’ve experienced a lot of coin flips in my life. I’ve won about half of them. By funding opportunities that involve feedback loops (allowing impact to be observed and measured in the short term), a lot can be learned about how well models work and when probability estimates can be made reliably.

Learn more

When probability assessments feel hazy, the haziness often stems from lack of knowledge about the subject under consideration. Acquiring a deep understanding of a subject may eliminate some haziness.

Position society

Since it isn’t possible to know the probability of all important developments that may happen in the future, it’s prudent to put society in a good position to handle future problems when they arise.[28]

Acknowledge difficulty

I know the ideas I’m proposing for doing better are not novel or necessarily easy to put into practice. Despite that, recognizing that we don’t have a reliable, universal formula for making good decisions under uncertainty has a lot of value.

In my experience, effective altruists are unusually skeptical of conventional wisdom, tradition, intuition, and similar concepts. Effective altruists correctly recognize deficiencies in decision-making based on these concepts. I hope that they’ll come to accept that, like other approaches, decision-making based on probability and expected value has limitations.

Huge thanks to everyone who reviewed drafts of this post or had conversations with me about these topics over the last few years!

Added 4/6/2019: There’s been discussion and debate about this post over on the Effective Altruism Forum.

Footnotes

- This isn’t quite right. See Wikipedia’s “Bias of an estimator” article for a more technical treatment.

- Informal thinking isn’t always this linear. If the informal thinking considers an opportunity from multiple perspectives, draws on intuitions, etc., the risk of postdecision surprise may be reduced.

- “The solution to the optimizer’s curse is rather straightforward.”

From The Optimizer’s Curse and How to Beat It (archived here).

- I’ll discuss Karnofsky’s proposal in more detail later in this post. Early in his post (archived here), Karnofsky writes:

“We believe that any estimate along these lines needs to be adjusted using a ‘Bayesian prior’; that this adjustment can rarely be made (reasonably) using an explicit, formal calculation; and that most attempts to do the latter, even when they seem to be making very conservative downward adjustments to the expected value of an opportunity, are not making nearly large enough downward adjustments to be consistent with the proper Bayesian approach.”

- “We then propose the use of Bayesian methods to adjust value estimates. These Bayesian methods can be viewed as disciplined skepticism and provide a method for avoiding this postdecision disappointment.”

From the abstract of Smith & Winkler 2006.

- From Smith and Winkler 2006, pages 315-316. The poorly rendered character is v-hat.

- I believe Karnofsky’s post is consistent with this view. In Why we can’t take expected value estimates literally (archived here), Karnofsky does not advocate drawing on real-world data as a realistic method for coming up with a prior.

- I expect most people who’ve interacted with members of the effective altruism community won’t need convincing on this point, so I’ve kept further thoughts on this point out of the main text. Here are a couple of examples:

  - Effective altruism appeals to people who are OK with utilitarian-leaning ethics. Utilitarianism involves taking everything people care about and trying to capture that in an abstract, quantifiable metric called utility. People who subscribe to less-abstract, common-sense ethics often struggle to understand EA’s appeal.

  - GiveWell uses explicit mathematical models as a key input in decisions about which programs to fund. Those models require abstractions. As far as I can tell, explicit models do not play such an essential role in the decisions made by most other major funders in global health and development.

  - Effective altruists seem to take things like the near-term development of whole-brain emulation, cryonics, and Drexler-style molecular nanotechnology more seriously than many people. This may be due to a notion along the lines of “we’ve already done the hard work of coming up with the abstract models we need (i.e., standard physics) so the rest of the work is just engineering problems.” (To be clear, there are other plausible explanations for why effective altruists take these potential technologies unusually seriously.)

- I’m assuming here that we’re considering smooth surfaces found on sphere-like planets.

- The excerpt comes from Cook’s Following an idea to its logical conclusion post (archived here). I’ve removed a typo from the excerpted section.

- For what it’s worth, I don’t think these people were just offering flippant lip-service to their religion. They were really proposing using the Bible as a comprehensive guide on how to live.

- Exodus 20:3, New International Version

- Exodus 20:13, New International Version

- For example:

  - “Anyone who curses their father or mother is to be put to death. Because they have cursed their father or mother, their blood will be on their own head.” Leviticus 20:9.

  - “If a man has sexual relations with a man as one does with a woman, both of them have done what is detestable. They are to be put to death; their blood will be on their own heads.” Leviticus 20:13.

- My childhood peers generally had social circles that were less deeply intertwined with the Bible-centric evangelicals than the social circles of the teachers and leaders pushing extremely Bible-centric views. I think that goes a long way in explaining why most of my peers, while remaining religious, accepted the more mainstream view that deciding how to live and behave is difficult and worth approaching from a lot of different angles.

- From Nate Soares’ Knightian uncertainty in a Bayesian framework. Archived here.

- If you think I might be wrong here since probability may be in some sense intrinsic to quantum mechanics (rather than a thing used to describe observations), I suggest just ignoring this sentence. I don’t think the sentence is crucial to the central point of the post.

- The excerpt comes from a blog post by Andrew Gelman titled, “What is probability?” (archived here). I think the short post is worth reading in full.

- To give the benefit of the doubt, let’s assume everyone you ask is intelligent, has a decent understanding of probability, and more or less agrees about what constitutes an all-powerful god.

- Due to my familiarity with GiveWell, I mention it in a lot of examples. I don’t think the issues I raise in this post should be more concerning for GiveWell than other organizations associated with the EA movement.

- The most recent, publicly available follow-up study is Baird et al. 2016. GiveWell’s rationale can be found in its deworming intervention report (archived here). This excerpt comes from that report:

“In our view, the most compelling case for deworming as a cost-effective intervention does not come directly from its subtle impacts on short-term health (which appear relatively minor and uncertain) nor from its potential reduction in severe symptoms of disease effects (which we believe to be rare), but from the possibility that deworming children has a subtle, lasting impact on their development and thus on their ability to be productive and successful throughout life.”

- Some of the factors that make interpretation and extrapolation difficult:

  - Worm loads were way more intense during the study than they are in typical settings today.

  - The study was randomized in an unconventional manner.

  - The effect on income is large and not easily explained by improvements in any of the health metrics that were recorded.

  - Deworming appeared to cause a significant shift in occupational choice, which is hard to explain.

- E.g., behavioral, road-safety interventions, long-run effects of iodine, long-run effects of iron supplementation.

- For example, let’s say you’re trying to meet a friend who calls you and says she’s on the same street at a coffee shop one block away from you. You forget to ask which direction the coffee shop is from you. If you act as if there’s a 50% chance she’s one block to the right and a 50% chance she’s one block to the left, you’ll act effectively. You might end up walking three blocks, you might walk one. You will find your friend in a reasonable amount of time regardless.

- For example, let’s say you’re trying to find the most cost-effective global health intervention. Research shows a certain vitamin supplement has huge benefits at a given dose. You’re estimating the cost-effectiveness of a program giving out supplements with a dose three times higher than that used in research. You don’t have data to calculate a dose-response curve, so instead assume there’s a 50% chance the dose-response curve is supralinear and a 50% chance the curve is sublinear.

In this case, there are good options that don’t require you to immediately put all your uncertainty in terms of precise probability estimates. You could instead talk to vitamin experts or become one yourself. Even if these options weren’t available, it would still be dangerous to force your ignorance to resolve to probability estimates. For more on that, see John D. Norton’s Ignorance and Indifference.

- This excerpt comes from Karnofsky’s Why we can’t take expected value estimates literally post (archived here).

- Preventing malaria deaths through the provision of insecticide-treated nets strikes me as an intervention that looks robustly cost-effective under multiple models. Randomized controlled trials of net provision suggest that nets substantially reduce deaths. Macro-scale evidence suggests that net provision tends to lead to declines in malaria. There’s also separate theoretical and empirical evidence supporting individual parts of the causal chain from net provision to averted deaths. Insecticides are known to kill mosquitoes. Insecticides continue to be effective when used on nets. Nets lead to fewer infectious bites. Fewer bites lead to fewer malaria cases.

- Alternatively: It’s prudent to put society in a good position to deal with potential problems when it’s possible to estimate the probabilities of those problems without extreme haziness.